There is a revolution in photography called "lightfield photography" currently taking place, thanks to a couple of geniuses from Stanford and a new product company they began last year (2011), centered around a study first published within a PhD thesis by Ren Ng.

His thesis can be read in detail via the following PDF:

Lightfield photography represents a new level of captured information, or captured light data (a.k.a. data about the photons that make any photo a reality), at the moment a photograph is taken. It is easy to compare the post-editing opportunities of photography produced through light field capture with the flexibility enjoyed by those who work in the field of 3D computer simulation (as implied and described within my Nov. 2011 blog posting: http://themichaelhulmeproject.blogspot.com/2011/11/genius-of-ren-ng-and-lytro.html ). The topic I most want to explore now, however, is the inevitable transition this same philosophy will bring to the data produced for visual simulations and perceived environments, as similar approaches are applied to computer graphics rendering techniques for simulated virtual environments.

In order to explore this comparison in more detail, I think it is best if you familiarize yourself with the power and depth, or "richness", of the information which is captured within any lightfield moment of photography, and the results a lightfield image "player" is then able to instantly convey once the image file is available. Therefore, I have embedded a few examples directly from Lytro (the makers of the first consumer light field camera) within the page below. The main feature of the player and the images I wish for you to examine is the perspective shifting feature which is now available. To see this feature in action, click once on the image, wait for the player to complete its initial processing of the image, then click and hold your mouse button on the image a second time. At this point you can release the mouse button and continue manipulating the perspective shift until you click the image again to deactivate this mode.
Now click within the live Lytro images below to witness the rich nature and depth of Lightfield photography based data and rendering:


What I want you to notice while you manipulate the image in this manner is the amount of data which is revealed that was not visible when only one perspective is viewed. In other words, the portions of the scene which are obscured by foreground objects until you look around those objects to reveal more details of the background scene. While it is a subtle reckoning, if you will, it is what offers depth and a true "3D" opportunity for image manipulation, even within a 2D viewer.

As you view this 3D manipulation, I want you to begin to imagine a world of 3D visualization that is no longer dependent on tessellated, triangulated mesh geometry: the typical methods of 3D modeling which are currently utilized in every gaming, real-time and scripted simulation today. This is the type of calculated and culled geometry which is then draped with the appropriate texture bitmaps or "shaders", and in some cases given much more detail through further "bitmapped" manipulation of the surfaces within the scene.
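To make the conventional approach concrete, here is a minimal sketch of what "tessellated, triangulated mesh geometry" looks like as data. The square, the names `VERTICES`, `TRIANGLES` and `UVS`, and the helper functions are all my own illustration, not taken from any particular modeling package.

```python
from math import sqrt

# Hypothetical toy mesh: a unit square tessellated into two triangles.
VERTICES = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
TRIANGLES = [(0, 1, 2), (0, 2, 3)]  # each triangle is three indices into VERTICES
# One texture coordinate per vertex: this is how a bitmap gets "draped" on the mesh.
UVS = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]


def triangle_area(a, b, c):
    """Area of one triangle: half the length of the cross product of two edges."""
    ab = (b[0] - a[0], b[1] - a[1], b[2] - a[2])
    ac = (c[0] - a[0], c[1] - a[1], c[2] - a[2])
    cross = (
        ab[1] * ac[2] - ab[2] * ac[1],
        ab[2] * ac[0] - ab[0] * ac[2],
        ab[0] * ac[1] - ab[1] * ac[0],
    )
    return 0.5 * sqrt(sum(component * component for component in cross))


def mesh_area(vertices, triangles):
    """Total surface area, summed triangle by triangle."""
    return sum(
        triangle_area(vertices[i], vertices[j], vertices[k])
        for i, j, k in triangles
    )


if __name__ == "__main__":
    print(len(TRIANGLES), "triangles, total area", mesh_area(VERTICES, TRIANGLES))
```

Even this trivial surface needs explicit vertices, connectivity and texture coordinates before anything can be drawn, which is the overhead the rest of this post imagines leaving behind.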

In the case of a light field rendering, which is what these players below are offering in real-time, there is no "geometry" required in order to reveal the rich data present within the file.
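A toy sketch may help show why no geometry is needed at display time. It assumes a drastically simplified light field: one row of single-character "pixels" per viewpoint, with a foreground object (`#`) that shifts against the background as the viewpoint moves. Every name and constant is hypothetical, and this is in no way Lytro's file format or player logic.

```python
# Toy light field: one recorded image (a single row of "pixels") per viewpoint u.
BACKGROUND = "abcdefgh"   # far-away scene content
FOREGROUND_START = 3      # foreground covers columns 3..4 in the centre view
FOREGROUND_WIDTH = 2
DISPARITY = 1             # foreground shift, in pixels, per viewpoint step


def capture_view(u):
    """Simulate what viewpoint u records. Only used to build the toy data."""
    start = FOREGROUND_START - u * DISPARITY
    row = list(BACKGROUND)
    for x in range(start, start + FOREGROUND_WIDTH):
        if 0 <= x < len(row):
            row[x] = "#"  # foreground hides the background at this pixel
    return "".join(row)


# The stored light field is just the recorded views, with no mesh attached.
LIGHT_FIELD = {u: capture_view(u) for u in (-1, 0, 1)}


def render(light_field, u):
    """Perspective shifting is a lookup of already-recorded rays."""
    return light_field[u]


def revealed_background(light_field):
    """Background pixels hidden in the centre view but visible from another view."""
    centre = light_field[0]
    hidden = [x for x, pixel in enumerate(centre) if pixel == "#"]
    revealed = {}
    for view in light_field.values():
        for x in hidden:
            if view[x] != "#":
                revealed[x] = view[x]
    return revealed


if __name__ == "__main__":
    for u in sorted(LIGHT_FIELD):
        print(u, render(LIGHT_FIELD, u))
    print(revealed_background(LIGHT_FIELD))
```

The only place the scene's layout appears is in `capture_view`, which stands in for the camera. Once the views are recorded, `render` never consults it, and the pixels behind the foreground object come back simply by choosing a different viewpoint.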


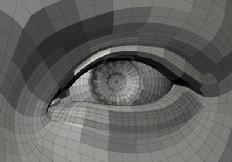

So I feel it is important at this point to consider the HUGE difference in both the initial data utilized and the method of rendering exhibited within the freely manipulated image above. Consider its exceptionally rich content, and the fact that it does NOT depend on any of the conventional approaches to geometric data, rendering or display of the desired scene. Compare this with the approaches typically utilized today, which depict a scene through geometric reconstruction, such as this example of a traditional 3D modeled eye, shown here on the right.

Traditional 3D Wireframe Geometry

As the computer science of simulating virtual environments advances, both the construction of those environments and their realistic rendering will move far beyond the confines of manually constructing a 3D scene, draping it with photo-realistic textures, and then further manipulating it through simulated light and the complex portrayal of lens and depth of field. We will soon see an advancement far beyond these conventional methods of construction and the physics we have so capably imitated with today's methods, and we'll realize that our computers do not require the same conventions for construction and rendering which have served us so well up to this point.


You can also listen to an interview recorded in the summer of 2011 with the Director of Photography at Lytro, as he explains a bit about the process of using their consumer device and the nature of such photography.

(Sourced from: https://www.lytro.com/learn )


There is more than one aspect to this future of rendering: a new paradigm in modeling, and a new paradigm in depicting the information stored within the desired environment's database. In fact, the abstract requirements of the render engine are in part what allow for a more abstract definition of the scene database, and vice versa.

In the case of defining the scene, or creating the scene database, the plausibilities of the requested environment are compared to those of the "real world", or at least to descriptions of it made instantly available to us through the internet via images, art, and video. This definition is then piped through a rendering engine capable of making its own assumptions about scene depiction, as it also utilizes its knowledge of physics, light photons, and scene interaction to draw a cohesive conclusion of the result.

Much of Computer Graphics has been the effort of utilizing science to imitate art or, in many photo-realistic simulations, utilizing art (made from science) to imitate life. In this next evolution of modeling and rendering, a more conceptual and perceptual opportunity will become the goal of the software.

Picasso once criticized audiences and critics who try to "understand art", with the following quote: "Everyone wants to understand art. Why not try to understand the song of a bird?...people who try to explain pictures are usually barking up the wrong tree."

But in fact, whenever we teach a computer to more accurately depict the world around us, to act as if it is taking a picture of a scene (rendering a still), or to act as a dolly and a full-motion camera (when rendering full-motion, scripted or real-time simulations), we are in essence interpreting not only art, but also the physics of light, lens, mechanics and even biology (in the case of inverse kinematics, or motion datasets, applied to live characters).


So just as Picasso found purpose in taking his finely honed craft of painting, and his usage of various mediums, to another level, another dimension, through the expression demonstrated within his abstract art, our methods of modeling and rendering will have to venture well beyond the confines of our current standards for the definition and depiction of a scene in order to significantly advance.

An easy comparison in the realm of software and scene creation for CG can be drawn by comparing the software development tools of today with those of 20 years ago. Had you told a programmer 20 years ago that "object oriented code" would be the norm, or presented the idea that a software dev tool would be comprised of so many pre-defined "libraries" or subroutines, divided into classes and easily represented within a simple diagrammatic flowchart that then automatically reveals the actual code they worked so hard to manually achieve, none of them would have believed you. Not to mention that, at the time, eliminating the craft of understanding how to logically connect and distribute the function of each subroutine would have seemed even more unlikely.

To this end, you must now picture taking a "Modeler" or scene builder who utilizes today's more conventional methods of defining a scene (for one thing, this craft's methodology is also contingent on how they understand the scene will be rendered). Now take this person to the modeling studio of the future, where few thoughts are offered towards the dimensions of a room, the color of an animal, or the realism of a texture or shader library. Any modeler or craftsman utilizing today's tools would simply say, "that is not 'real' modeling." This is the same response a programmer of 20 years ago would have quipped about the development tools, and subsequent techniques, afforded today's software developers.

So how do you make the jump from Basic to Visual Basic? Or from line-by-line, hand-typed, manually authored ASCII code and custom designed logic operands and subroutine relationships, to the natural, successful and efficient opportunities now available to software developers through object oriented code?

On a simpler basis, ask yourself: do you have to know how to spell well, or at all, in order to type a grammatically accurate letter on a computer? The answer is "no". Do you have to know how to manually code each line of your software in order to develop a software tool anymore? The answer is "no". Will 3D modelers and simulation database creators have to know how to 3D model in the future in order to create accurate depictions of their scenes? The answer is "no".

The future 3D Modeler will serve as a "Describer". The internet, or perhaps some locally curated, massive information database (one which might have been narrowed in its coverage to focus more on the topics and goals of the virtual environments a studio or discipline requires), will need to be accessible by the "Description Depiction Engine" (or DDE). The resulting scene of models constructed by the "Describer" is then rendered through a "Plausibility Projection - Coalescence Engine" (or PP-CE), and later lit based on "Virtual Photonics - Object Emissions" (or VP-OE), which are translated into a "Light Field Stream" (or LFS) and rendered as either 2D or 3D final visual results of both visible and non-visible photons for the end user.

Vast Object Libraries (or VOLs) and their associated Comparison Engines (or CEs) are the key filters for determining the viability and eradicable value of the varied categories and specific assets needed to assist in the creation of the Describer's defined virtual environments. (Both the VOLs and the CEs are assets within the DDE.)

The PP-CE (a.k.a. Plausibility Projection - Coalescence Engine) must then quickly determine the relationship, influence and limitations each presented object, data-set and property will offer to the overall combination of objects as characteristics and additional properties or objects are added and/or become influential to the scene as they are "described".

One of the roles of the DDE (a.k.a. the "Description Depiction Engine") is to guide, curate and manifest the plausibility, feasibility, accurate references or constraints, if you will, of each described scene, assisting every step of the way towards the reasonable or even abstract nature of the most likely intention and results for the scene definition being continually, and in many cases creatively, defined by the "Describer" (a.k.a. the 3D modeler of the future). Whether you look at the Comparison Engines' use of the Vast Object Libraries as the definer, refiner or restrictor of the desired outcome, in either event the final result is combined and displayed in a rudimentary format via the "Description Depiction Engine" (not unlike the vast difference between wire-frame and photo-realistic renderings, only this time a vector of the crude rendering is not the caveat being displayed). These engines are key in the future of contextual, depicted, light field inspired rendering.
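To show how these stages might hand data to one another, here is a deliberately tiny sketch of the flow from description to light field stream. Only the stage names (DDE, VOL, PP-CE, VP-OE, LFS) come from this post. The three-item library, the `indoor` and `emits_light` properties, and every function body are placeholders I have assumed purely for illustration; no such engines exist yet.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical stand-in for a Vast Object Library: asset name -> known properties.
VOL: Dict[str, Dict[str, bool]] = {
    "lamp": {"emits_light": True, "indoor": True},
    "sofa": {"emits_light": False, "indoor": True},
    "whale": {"emits_light": False, "indoor": False},
}


@dataclass
class SceneObject:
    name: str
    properties: Dict[str, bool]


def description_depiction_engine(description: str) -> List[SceneObject]:
    """DDE: match the Describer's words against the VOL.
    The 'Comparison Engine' here is nothing more than a dictionary lookup."""
    words = description.lower().replace(",", " ").split()
    return [SceneObject(word, VOL[word]) for word in words if word in VOL]


def plausibility_coalescence_engine(objects: List[SceneObject], indoor: bool) -> List[SceneObject]:
    """PP-CE: keep only the objects that are plausible for the described setting."""
    return [obj for obj in objects if obj.properties["indoor"] == indoor]


def virtual_photonics(objects: List[SceneObject]) -> List[str]:
    """VP-OE: find which objects emit light."""
    return [obj.name for obj in objects if obj.properties["emits_light"]]


def light_field_stream(objects: List[SceneObject], emitters: List[str]) -> List[Tuple[str, str]]:
    """LFS: a stand-in for rays, written as (emitter, lit object) pairs."""
    return [(e, obj.name) for e in emitters for obj in objects if obj.name != e]


def describe_and_render(description: str, indoor: bool = True) -> List[Tuple[str, str]]:
    objects = description_depiction_engine(description)
    plausible = plausibility_coalescence_engine(objects, indoor)
    emitters = virtual_photonics(plausible)
    return light_field_stream(plausible, emitters)


if __name__ == "__main__":
    print(describe_and_render("a living room with a sofa, a lamp and a whale"))
```

In this toy run the whale is matched by the library but discarded as implausible for an indoor scene, and the lamp becomes the only source of light reaching the sofa. A real system would obviously replace each lookup with something far richer.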


Allowing a readily available database of the world to actively serve as the parametric basis for the resulting compilation of a scene's intention, which is initially inspired simply through a verbal explanation of the goal, is a radical approach to deductive and contemplative scene creation and modeling. It is an approach which will require continual availability of, and access to, the very information which has become ubiquitous to our way of life through our daily use of and dependency on the internet. In this same way, our searches are automated, results are combined, and useless feedback is discarded, as the final useful parametric results are cunningly presented via the DDE to serve the request of the visual we are wishing to create.

So did the creators of the Matrix have it somewhat right when they portrayed those who directly peered into the Matrix as bystanders who only benefited from an abstract version of reality?


Are we to view an abstract representation of the Virtual Environment within the system? And will it be to our advantage to let go of the labor intensive conventional modeling methods in favor of a more abstract representation, "Described", and entrusted to the AI and coordination of Vast Object Libraries and their associated Comparison Engines?

I guess we'll wait and see...
